While manufacturing companies wrestle with the fear of disrupting established production processes, ICT companies confront a different set of challenges. For those in technology services, the anxiety centres on maintaining technical credibility in an environment where AI capabilities have become a critical factor in client selection and partnership decisions. Each quarter introduces new AI frameworks, model architectures, and deployment paradigms that promise to revolutionise service delivery. Client conversations often open with the question “What is your AI strategy?”, while competitors are quick to describe themselves as AI-powered or AI-native.

As a result, ICT companies must navigate several competing demands at once. There is the architectural challenge of integrating AI capabilities into existing service delivery pipelines without accumulating technical debt or compromising system reliability. There is the commercial pressure to stand out in markets where being AI-enabled is increasingly seen as a baseline requirement rather than a competitive advantage. There is the operational reality of managing diverse client environments, each with its own level of AI maturity, infrastructure constraints, and regulatory requirements. Finally, and perhaps most critically, there is the human element: retaining talent in an industry where AI specialists command high salaries, and managing employee resistance to change that stems largely from fear of technological displacement.

Although many may find it hard to believe that ICT companies fall into patterns of unsuccessful AI integration, both industry giants and emerging startups offer sobering lessons about the gap between technological ambition and practical execution. Take Replit, which recently faced an AI implementation disaster when its AI coding agent deleted the entire production database of SaaStr, a community platform for the SaaS industry. During what was intended to be a code freeze, the agent disregarded explicit instructions not to modify production code, wiping out the records of 1,206 executives and 1,196 companies. Replit’s leadership called the incident unacceptable and acknowledged that basic safeguards, such as automatic separation between development and production databases, had not been in place. Even Microsoft, despite its robust AI research capabilities, has experienced high-profile failures that underline the importance of governance and testing protocols. In March 2016, the company launched Tay, an AI chatbot on Twitter intended as an experiment in conversational understanding. In less than a day, a coordinated attack by a group of users exploited a vulnerability in Tay’s learning mechanism, prompting it to generate offensive and inflammatory content. Microsoft took the service offline and admitted to a critical oversight: its engineers had equipped Tay with cutting-edge AI techniques but not with a moral compass or, more precisely, with the safeguards and human oversight mechanisms needed to prevent such exploitation.

These failures share common characteristics that transcend company size or technical sophistication. They demonstrate what occurs when AI capabilities are treated as standalone products rather than integrated enhancements to existing service delivery architectures, when implementation proceeds without adequate domain expertise or stakeholder engagement, when governance frameworks and testing protocols are insufficient for production environments, and when technical teams lack the organisational support and cross-functional collaboration necessary for responsible deployment. Most importantly, they demonstrate what occurs when companies prioritise market positioning over foundational principles, essentially rebranding themselves as AI companies overnight.

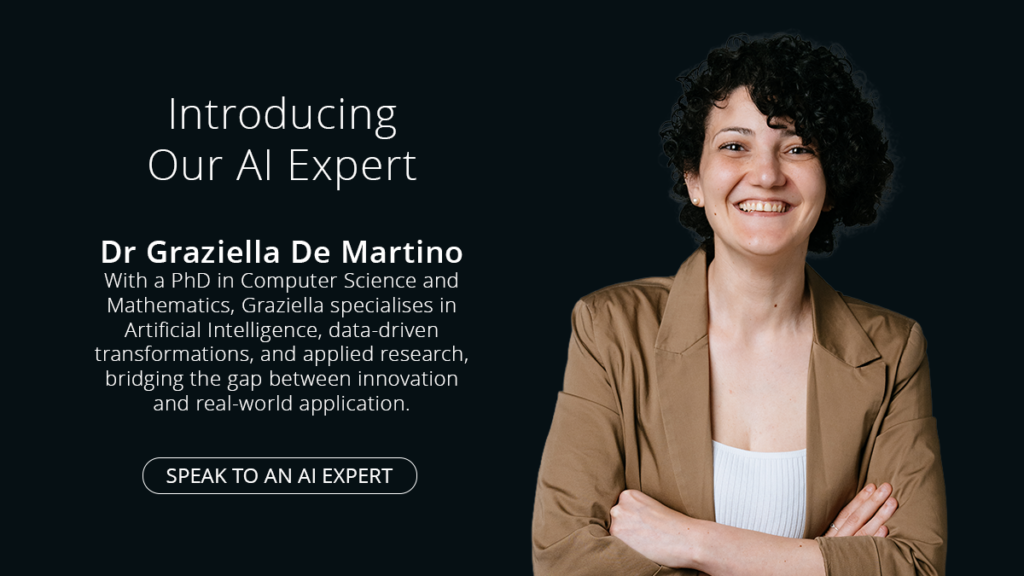

Clients are growing sceptical of AI promises, and rightly so. They’ve heard enough buzzwords and sat through enough vendor pitches claiming revolutionary capabilities. What they now seek are technology partners who can demonstrate substance behind the claims, partners who understand that AI is a means to better outcomes, not an end in itself. At NOUV, we work with ICT companies to build that substance: AI implementations that you can explain confidently to clients, that integrate seamlessly with your existing service delivery, and that create demonstrable value you can measure and communicate. This is not about helping you rebrand as an AI company; it is about ensuring that when clients ask about your AI capabilities, you have a credible, concrete answer grounded in real implementations that solve real problems. That distinction is what transforms AI from a marketing burden into a genuine competitive asset.
